In July 2024, the research paper titled “PCAC-GAN: a sparse-tensor-based generative adversarial network for 3D point cloud attribute compression”, co-authored by the project participants – Prof. Hui Yuan, Dr Xin Lu, Mr Xiaolong Mao and others from De Montfort University and Peking University, was accepted for publication in Computational Visual Media. This paper focuses on the development of a novel deep learning-based point cloud attribute compression method that uses a generative adversarial network (GAN) with sparse convolution layers.

Learning-based methods have proven successful in compressing the geometry information of point clouds. For attribute compression, however, existing learning-based methods are still not as effective as G-PCC, the current state-of-the-art method. To address this challenge, we make the following contributions:

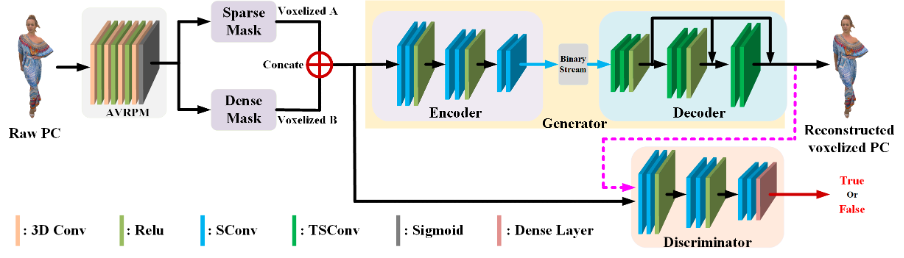

(1) We propose a novel approach for compressing 3D point cloud attributes using a GAN consisting of sparse convolution layers. To the best of our knowledge, this is the first time GANs have been applied to point cloud attribute compression.

(2) We propose a novel multi-scale transposed sparse convolutional decoder that contributes to achieving a higher compression quality and ratio in learning-based compression systems while maintaining reasonable computational complexity.

(3) We develop an adaptive voxel resolution partitioning module (AVRPM) to partition the input point cloud into blocks with adaptive voxel resolutions. This feature enables AVRPM to process point cloud data effectively.
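To make the idea of block partitioning with per-block voxel resolutions more concrete, here is a minimal NumPy sketch. It is not the AVRPM from the paper: the function name `partition_adaptive`, the fixed cubic block size, and the rule "dense blocks get finer voxels" are all assumptions chosen for illustration. Each block is returned as integer voxel coordinates plus averaged colours, which is the kind of coordinate/feature pair a sparse convolution layer typically takes as input.

```python
import numpy as np

def partition_adaptive(points, colors, block_size=64.0,
                       fine=1.0, coarse=2.0, density_threshold=1000):
    """Split a point cloud into cubic blocks and voxelize each block.

    Hypothetical rule (an assumption, not the paper's method): a block with
    at least `density_threshold` points uses the `fine` voxel size,
    any other block uses the `coarse` voxel size.
    """
    points = np.asarray(points, dtype=float)
    colors = np.asarray(colors, dtype=float)

    # Assign every point to the cubic block that contains it.
    block_ids = np.floor(points / block_size).astype(np.int64)
    unique_blocks, block_of_point = np.unique(
        block_ids, axis=0, return_inverse=True)
    block_of_point = block_of_point.reshape(-1)

    blocks = []
    for b, block_index in enumerate(unique_blocks):
        mask = block_of_point == b
        pts, cols = points[mask], colors[mask]

        # Pick the voxel size for this block from its point count.
        voxel_size = fine if len(pts) >= density_threshold else coarse

        # Quantize block-local coordinates to integer voxel indices.
        origin = block_index * block_size
        voxels = np.floor((pts - origin) / voxel_size).astype(np.int64)
        coords, voxel_of_point = np.unique(
            voxels, axis=0, return_inverse=True)
        voxel_of_point = voxel_of_point.reshape(-1)

        # Average the colours of all points that fall into the same voxel.
        feats = np.zeros((len(coords), cols.shape[1]))
        np.add.at(feats, voxel_of_point, cols)
        counts = np.bincount(voxel_of_point, minlength=len(coords))
        feats /= counts[:, None]

        blocks.append({"origin": origin, "voxel_size": voxel_size,
                       "coords": coords, "feats": feats})
    return blocks
```

Only occupied voxels are stored, so memory grows with the number of points rather than with the volume of the bounding box, which is the main reason sparse tensors suit point clouds.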

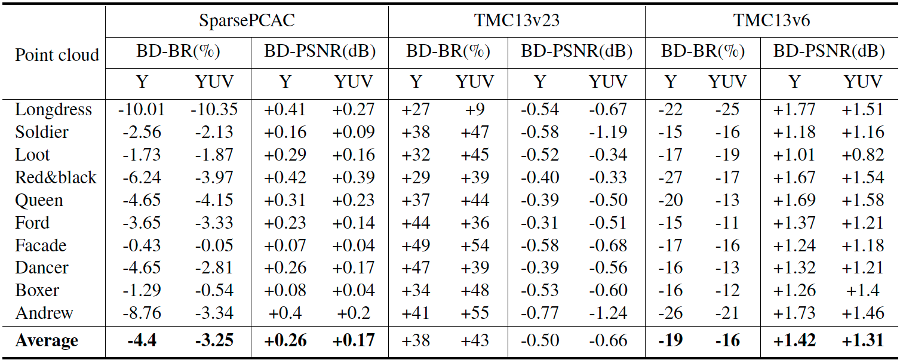

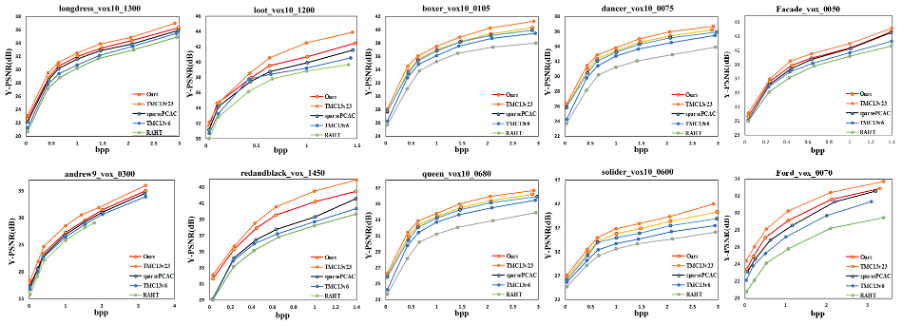

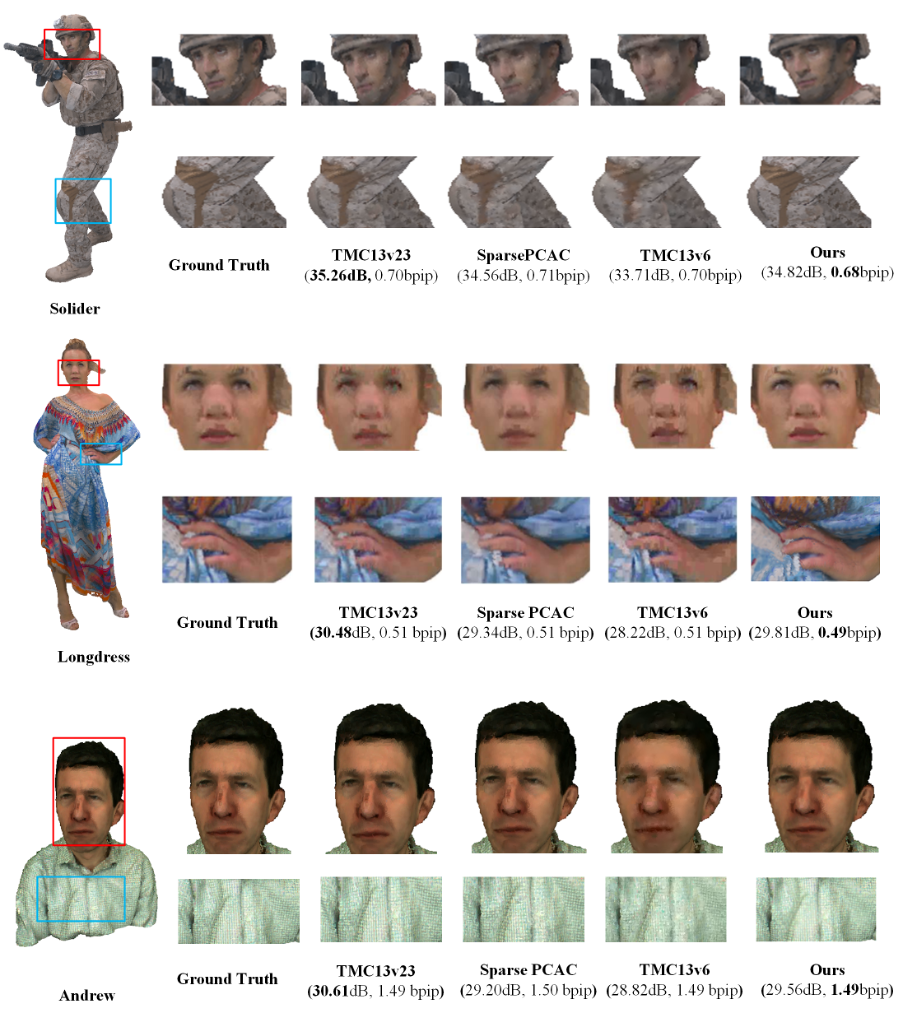

Experimental results demonstrate that our method outperformed SparsePCAC [1] and TMC13v6 in terms of Bjøntegaard delta bit rate (BD-BR), Bjøntegaard delta peak signal-to-noise ratio (BD-PSNR), and subjective visual quality. Although its BD-BR and BD-PSNR performance was inferior to that of TMC13v23, our method offered better visual reconstruction quality. It should be noted, however, that a direct comparison with TMC13v23 is not entirely fair: our method uses a generative approach, which places it at a disadvantage. This disadvantage arises from the inherent differences in complexity and objectives between generative methods and conventional coding methods, which may affect both compression efficiency and the assessment of generated content quality. We anticipate that with further improvements to filtering and cross-scale correlation for prediction, our method will outperform TMC13v23.
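For readers unfamiliar with the two objective metrics, the sketch below shows one common way to compute them, following the usual Bjøntegaard recipe: fit a cubic polynomial to each rate-distortion curve in the log-rate domain and average the gap between the two fits over the range they share. The function names are ours, and this is an illustrative assumption about the procedure, not the evaluation script used in the paper.

```python
import numpy as np

def _avg_gap(x_anchor, y_anchor, x_test, y_test):
    """Mean of (test fit - anchor fit) over the x-range both curves share."""
    fit_anchor = np.polyfit(x_anchor, y_anchor, 3)  # cubic fit per curve
    fit_test = np.polyfit(x_test, y_test, 3)
    lo = max(np.min(x_anchor), np.min(x_test))
    hi = min(np.max(x_anchor), np.max(x_test))
    # Integrate each fitted polynomial over [lo, hi].
    area_anchor = np.diff(np.polyval(np.polyint(fit_anchor), [lo, hi]))[0]
    area_test = np.diff(np.polyval(np.polyint(fit_test), [lo, hi]))[0]
    return (area_test - area_anchor) / (hi - lo)

def bd_psnr(rate_anchor, psnr_anchor, rate_test, psnr_test):
    """Average PSNR gain in dB of the test codec at equal bit rate."""
    return _avg_gap(np.log10(rate_anchor), np.asarray(psnr_anchor, float),
                    np.log10(rate_test), np.asarray(psnr_test, float))

def bd_rate(rate_anchor, psnr_anchor, rate_test, psnr_test):
    """Average bit-rate change in percent of the test codec at equal PSNR."""
    gap = _avg_gap(np.asarray(psnr_anchor, float), np.log10(rate_anchor),
                   np.asarray(psnr_test, float), np.log10(rate_test))
    return (10.0 ** gap - 1.0) * 100.0
```

Each curve needs at least four rate points for the cubic fit. A negative BD-BR means the test codec needs fewer bits for the same quality, and a positive BD-PSNR means it delivers higher quality at the same bit rate.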

Overall, the proposed PCAC-GAN model shows considerable potential in improving the efficiency of point cloud attribute compression. Our innovative use of GANs and sparse convolution layers may open up new possibilities for tackling the challenges associated with point cloud attribute compression.

Reference:

[1] Wang J, Ma Z. Sparse tensor-based point cloud attribute compression. 2022 IEEE 5th International Conference on Multimedia Information Processing and Retrieval (MIPR), 2022: 59–64.